The realm of Natural Language Processing (NLP) has witnessed a remarkable evolution with the advent of Large Language Models (LLMs). These colossal models, boasting billions of parameters, have demonstrated remarkable proficiency across various tasks, including text generation, translation, question answering, and code generation (You know it even if I don’t tell you…). However, adapting these powerful yet resource-intensive models to specific downstream tasks presents significant challenges, primarily due to their sheer size and the computational burden associated with full fine-tuning.

A solution? Discover about it in the following sections:

- Understanding Fine-Tuning and Its Challenges

- PEFT: A Paradigm Shift in LLM Adaptation

- Supervised Fine-Tuning (SFT)

- Challenges and Limitations of PEFT

- LoRA: Low-Rank Adaptation

- GaLore: Gradient Low-Rank Projection

- QLoRA: Efficient Fine-tuning of Quantized LLMs

- DoRA: Weight-Decomposed Low-Rank Adaptation

- LoftQ: LoRA-Fine-Tuning-Aware Quantization

- Comparative Performance Summary

- Conclusion

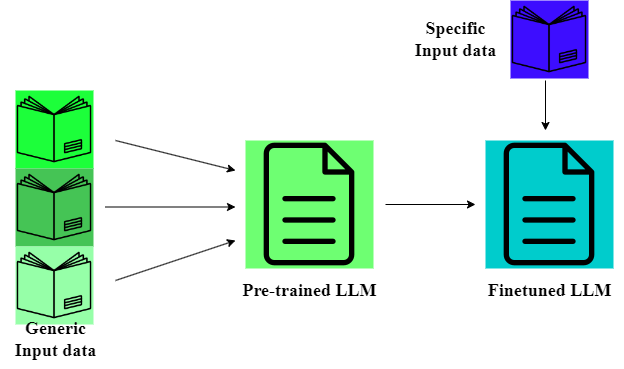

Understanding Fine-Tuning and Its Challenges

Fine-tuning is the process of adapting a pre-trained LLM to perform well on a specific downstream task. This typically (with the full fine-tuning) involves initializing the model with weights learned during pre-training on a massive text corpus and then further training it on a smaller, task-specific dataset . While effective, this process updates all model parameters, leading to several limitations:

- High Computational Cost: Training billions of parameters requires substantial computational resources, often exceeding the capabilities of readily available hardware.

- Storage Overhead: Fine-tuning for multiple tasks necessitates storing multiple copies of the model, each with its unique set of fine-tuned weights, leading to significant storage requirements.

- Slow Task Switching: Switching between different tasks necessitates loading different fine-tuned models, incurring considerable time overhead.

So, these limitations highlight the need for more efficient fine-tuning strategies…

PEFT: A Paradigm Shift in LLM Adaptation

Parameter-Efficient Fine-Tuning (PEFT) techniques address the limitations of full fine-tuning by updating only a small subset of the model’s parameters while keeping the vast majority frozen. This significantly reduces the computational cost, storage overhead, and task switching time.

Supervised Fine-Tuning (SFT)

Supervised Fine-Tuning (SFT) is a specific PEFT approach that involves providing the LLM with labeled examples of the desired task. These examples serve as a guide for the model to learn the specific task’s input-output mapping. The model is trained to minimize the difference between its predictions and the ground truth labels, thereby improving its performance on the task.

Challenges and Limitations of PEFT

While PEFT offers numerous advantages, it also faces certain challenges:

- Finding the Optimal Subset of Parameters: Identifying the most impactful parameters for a given task can be challenging.

- Balancing Performance and Efficiency: Reducing the number of trainable parameters can potentially lead to a performance drop compared to full fine-tuning.

- Convergence Difficulties: Training a small subset of parameters while keeping the rest frozen can sometimes lead to convergence issues.

To overcome these challenges, multiple PEFT methods emerged; let’s start from the first and most famous: LoRA.

LoRA: Low-Rank Adaptation

LoRA, introduced by Hu et al. (2021), is based on the hypothesis that weight updates during fine-tuning exhibit a low “intrinsic rank,” meaning they can be effectively represented by low-rank matrices. Instead of updating the original pre-trained weights, LoRA injects trainable rank decomposition matrices (low-rank adapters) into each layer of the Transformer architecture.

Key Features:

- Low-rank Decomposition: Weight updates are represented as the product of two low-rank matrices, significantly reducing the number of trainable parameters.

- Frozen Pre-trained Weights: The original pre-trained weights remain fixed, ensuring minimal changes to the model’s core knowledge.

- No Inference Latency: The learned adapters can be merged with the pre-trained weights during deployment, resulting in no additional inference latency.

Advantages:

- Reduced Memory Footprint: LoRA significantly reduces the memory required for training and storage, making it feasible to fine-tune large models on limited hardware resources.

- Faster Training: Training fewer parameters results in faster convergence, enabling efficient model adaptation.

- Quick Task Switching: Replacing only the small adapters allows for rapid switching between different downstream tasks.

Limitations:

- Potential Performance Gap: LoRA can sometimes result in a performance gap compared to full fine-tuning, particularly for complex tasks requiring significant weight updates.

GaLore: Gradient Low-Rank Projection

GaLore, proposed by Zhao et al. (2024), addresses the limitations of LoRA by leveraging the low-rank structure of the gradient matrix instead of approximating the weight matrix itself. It reduces memory usage by projecting the gradient into a low-rank subspace before applying the optimizer.

Key Features:

- Gradient Projection: GaLore projects the gradient into a low-rank subspace, reducing the size of optimizer states and minimizing memory usage.

- Full-Parameter Learning: Unlike LoRA, GaLore allows for the learning of full-rank weights without compromising memory efficiency.

- Dynamic Subspace Switching: GaLore can switch across low-rank subspaces during training, enabling more comprehensive exploration of the parameter space.

Advantages:

- Improved Memory Efficiency: GaLore achieves greater memory reduction compared to LoRA, especially for optimizer states.

- Enhanced Performance: GaLore can match or exceed the performance of full-rank training while requiring significantly less memory.

- Compatibility with Existing Optimizers: GaLore can be readily integrated with various optimizers, such as Adam, Adafactor, and 8-bit Adam.

Limitations:

- Throughput Overhead: GaLore incurs a slight throughput overhead compared to 8-bit Adam due to the computation of projection matrices.

QLoRA: Efficient Fine-tuning of Quantized LLMs

QLoRA, developed by Dettmers et al. (2023), tackles the memory challenge by quantizing the pre-trained LLM to 4-bit precision and then adding a small set of learnable LoRA adapters. It reduces memory usage enough to fine-tune a 65B parameter model on a single 48GB GPU while preserving the performance of full 16-bit fine-tuning.

Key Features:

- 4-bit Quantization: QLoRA quantizes the pre-trained model to 4-bit precision, drastically reducing the memory footprint.

- LoRA Adapters: Trainable LoRA adapters are added to the quantized model to fine-tune for specific downstream tasks.

- NormalFloat (NF4) Quantization: QLoRA introduces NF4, an information-theoretically optimal data type for quantizing normally distributed data.

Advantages:

- Unprecedented Memory Savings: QLoRA achieves remarkable memory reduction, enabling the fine-tuning of large models on consumer-grade GPUs.

- High-Fidelity Performance: QLoRA maintains the performance of 16-bit full fine-tuning despite using 4-bit quantization.

- Compatibility with LoRA: QLoRA leverages the benefits of LoRA for parameter-efficient fine-tuning.

Limitations:

- Potential Performance Degradation at Lower Bit Precisions: While highly effective at 4-bit precision, QLoRA might experience performance degradation at lower bit precisions.

DoRA: Weight-Decomposed Low-Rank Adaptation

DoRA, presented by Liu et al. (2024), builds upon LoRA by decomposing the pre-trained weight into two components: magnitude and direction. It fine-tunes both components, leveraging LoRA for directional updates to minimize the number of trainable parameters.

Key Features:

- Weight Decomposition: DoRA decomposes the pre-trained weights into magnitude and direction components.

- Magnitude and Directional Fine-tuning: Both components are fine-tuned, allowing for more granular optimization compared to LoRA.

- LoRA for Directional Updates: LoRA is employed to efficiently update the directional component, ensuring parameter efficiency.

Advantages:

- Enhanced Learning Capacity: DoRA improves upon LoRA’s learning capacity by separately fine-tuning magnitude and direction.

- Improved Training Stability: Weight decomposition leads to more stable optimization of the directional component.

- No Inference Overhead: Like LoRA, DoRA can be merged with the pre-trained weights during deployment, resulting in no additional inference latency.

Limitations:

- Increased Training Memory: DoRA requires more training memory compared to LoRA due to the separate optimization of magnitude and direction components.

LoftQ: LoRA-Fine-Tuning-Aware Quantization

LoftQ, introduced by Li et al. (2023), addresses the performance gap observed when combining quantization and LoRA fine-tuning. It simultaneously quantizes the LLM and finds an optimal low-rank initialization for LoRA, mitigating the discrepancy between the quantized and full-precision models.

Key Features:

- Joint Quantization and Low-Rank Approximation: LoftQ jointly optimizes the quantization and low-rank approximation of pre-trained weights.

- Improved LoRA Initialization: LoftQ provides a better initialization for LoRA fine-tuning, alleviating the quantization discrepancy.

- Alternating Optimization: LoftQ employs alternating optimization to find a closer approximation of the pre-trained weights in the low-precision regime.

Advantages:

- Enhanced Generalization: LoftQ significantly improves generalization in downstream tasks compared to QLoRA.

- Effectiveness in Low-Bit Regimes: LoftQ excels in challenging 2-bit and mixed-precision quantization scenarios.

- Compatibility with Different Quantization Methods: LoftQ can be used with various quantization techniques, including NormalFloat and uniform quantization.

Limitations:

- Increased Computational Cost: LoftQ incurs a slight computational overhead due to the alternating optimization process.

Comparative Performance Summary

The following table provides a general comparison based on relative performance, so actual results may vary depending on the specific task, dataset, and model size.

| Method | Memory Efficiency | Performance | Inference Latency | Key Feature |

| Full Fine-tuning | Low | High | None | Updates all parameters |

| LoRA | High | Moderate | None | Low-rank decomposition of weight updates |

| GaLore | Very High | High | Low | Gradient projection into low-rank subspace |

| QLoRA | Very High | High | None | 4-bit quantization and LoRA adapters |

| DoRA | Moderate | High | None | Weight decomposition and LoRA for direction |

| LoftQ | Very High | Very High | None | Joint quantization and LoRA initialization |

Conclusion

The quest for efficient fine-tuning techniques has led to the development of cool methods like LoRA, GaLore, QLoRA, DoRA, and LoftQ. Each of these offers a unique way to tweak LLMs for specific tasks without needing tons of computing power or memory, making it much easier for researchers and anyone else who wants to fine-tune large models without sophisticated hardware.

As this field keeps growing and evolving, we’re going to see even more awesome and effective ways to adapt these giant models to do what we want. The future’s looking bright, and we’re not just fine-tuning our models… We’re tuning into some major breakthroughs!

Leave a comment